A long time ago in a blog not very far away, I wrote about containers and how, at the time, I was struggling to see the point of them. For a one-man-band deployment they bring a lot of additional places to update (inside the container and outside), plus extra complexity to handle. The new abstraction was a new way of thinking, and treating a container like a VM only gets you so far. It also pushes new tooling and ways of doing things, which might not stand the test of time. It seemed like a solution looking for a problem, and it demanded a mental shift just to get onto the learning curve.

However, by trying to boil the ocean as I usually do, I also missed a large part of the benefit: delegation of responsibility. If you take an off-the-shelf container, someone else is (hopefully) managing its contents. You can also use the same container image across multiple environments, which is better for testing (you can even test on demand). You also get a clear separation of application from data (and better still, temporary and persistent data kept separate too). It also gives you easy version rollback: the previous container image is likely still on the system, so if an upgrade fails you can just restart with the previous image (oh but...). You hopefully gain some security benefits too. Only certain ports are opened, between certain containers (or to the world), and the same goes for disk access, so no more apps sharing the same files as a poorly thought-out integration. These extra layers in the security stack help.

That’s assuming your container comes from a trusted source, or a source you trust. Unless you build it yourself (boil the ocean), that is. Oh, and restarting from a previous container image for rollback isn’t quite so simple with Docker, as you have to redeploy the container. Oh, and not all containers seem to like it when the underlying OS has SELinux enabled. But tooling can help there, right?

The new tooling is always changing too. I went very heavily into Ansible for configuration management, and therefore had to make containers (Docker at the time) work within that framework. This forced me further down that rabbit hole. As docker-compose couldn’t do everything I wanted per container, I had to roll my own: either wrap Compose, or just do what it does. I ended up not wrapping Compose, as it was another dependency and I could build everything directly with Ansible modules anyway. One tool is better than two. Later on I did see many projects go the other route, using Ansible Jinja templates to render Docker Compose files and then deploying them with the docker_compose module. Between this and a later project I very much levelled up in Ansible, and I still use it for most automation-type tasks. Cue a possible side rant about YAML: I started with the more condensed short form in Ansible, which got deprecated and forced a migration, but I eventually embraced the full form and now everything just works. All those YAML haters just haven’t reached enlightenment yet.

Of course I had to embrace other tools too. I switched to Vagrant for throwaway test machines (way faster than VMware), and even for a heavily customised ONTAP lab (for automation work). Not that Vagrant is free of issues; it has plenty of gotchas (and Ruby). It’s fine if you stick within the 80% that everyone uses, but if you stray towards the edge of the path (not even off it) you can hit issues that are simply impossible to work around. Oh, so you want to swap VirtualBox out for QEMU? Well, you can’t do multiple disks in a box anymore. Oh, but if you want multiple machines behind a NAT-gateway-style network config, you can’t do that with VirtualBox. TA-DA!

Then, to improve the Vagrant tooling (and return to boiling the ocean, of course), I started using Packer to build the base images, and again I hit snags. The config file format has even changed during the time I’ve used it (from 1.1.3 through the current 1.7.10). It went from JSON (which sucked, as the schema had no comment fields) to their own language, HCL2 (urg!), but the transition is incomplete and not all features work yet! TA-DA! Packer builds Vagrant images nicely and lets you push them to the cloud (their cloud, not an S3 bucket), but hosting locally means more hacky bits (no versioning or local repository). So you end up wrapping it all in more shell scripting to handle the extra bits (two providers, same box), just as I used Ansible for the extra bits with containers. It’s the same cycle going round again, with each loop changing subtly from the previous one.

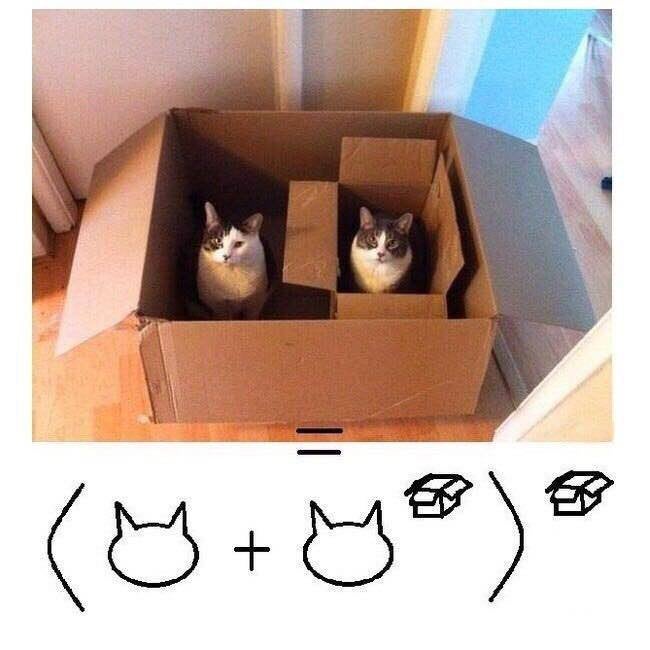

So you end up with a box running containers and another virtual box running containers. Sounds almost like a meme. Oh wait, it’s been done, LIKE EVERYTHING.

But so many other things have changed too, and those should be the topics of future posts.